Jon Krohn: 00:02

This is Five Minute Friday on Resilient Machine Learning.

00:19

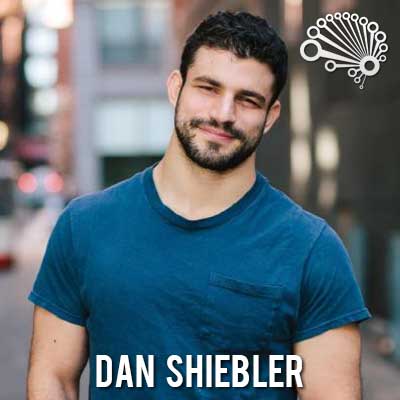

Like last week’s Five-Minute Friday, today I’m having a short, five-minute-ish conversation with a preeminent data science speaker that I met in person at the Open Data Science Conference West in San Francisco. That’s ODSC West for short. Our guest today is Dr. Dan Shiebler, who describes how we can make machine learning models resilient in production.

00:40

All right, I’m here with Dan Shiebler, who is head of machine learning at Abnormal Security. It’s a cyber attack detection company that fights cyber crime with machine learning. Dan Shiebler has been on many Super Data Science episodes, starting with episode number 59 many years ago. Most recently he was in episode number 451. He was one of my first guests when I took over as host of the show from Kirill Eremenko.

01:07

So this is your first time, in those many appearances, Dan, as Dr. Dan Shiebler. Congratulations. Dan recently defended his doctoral dissertation at Oxford on applications of category theory in machine learning. And if you’re interested in what category theory is, we discuss that at length in episode number 451, so you can refer back to that.

01:30

For today’s Five-Minute Friday episode of Super Data Science, I’m asking Dan about a specific topic. His ODSC West talk in 2022 was on Resilient Machine Learning. So Dan, can you tell us what is Resilient Machine Learning? Why is it important and how can our listeners implement it?

Dan Shiebler: 01:51

Absolutely. Thanks, Jon. Happy to be back. So Resilient Machine Learning is really about building machine learning systems that are capable of maintaining efficacy and maintaining good performance even when catastrophically bad things happen.

02:08

Anyone who’s worked on software systems in the past understands that there are components of systems that might break. It’s always the case that there’s a system that might feed features. For instance, perhaps your machine learning model is going to expect certain features about users that it’s going to utilize to generate predictions. Those features about users are perhaps provided by a service that might go down under certain circumstances.

02:35

How do you build a machine learning model that is still able to function when these services may go down? How do you prevent the application of your model from being dependent on the full guaranteed uptime of all of the systems that populate the features that these models are operating on? The crux of this is to understand really two core concepts. One is defaults, which is: how do you represent to your model the fact that a particular feature is missing? And how do you equip your model with the ability to still generate a good prediction even when that feature is missing?

03:14

A simple way to think about this is to think about this example with user features. Perhaps there’s a set of features that are all populated by this user service that feed into your model and that your model utilizes to make a prediction. What we can do is simply add an additional feature, what we would call an indicator, to indicate that these features are present or that they’re absent. Then we can take our training data and clone it, take two copies of our training data, one of which has these features and has the indicator variable set to present, and the other of which is missing these features and has the indicator variable set to absent.

03:45

Now, our model, through the training process, learns to behave well both when these features are present and when these features are absent. When we launch this model into production, we can serve the features, or stop serving the features when the system goes down, and still see good performance.
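
A minimal sketch of the defaults idea described above, in plain Python. The function names (`augment_with_indicator`, `serving_row`), the choice of `0.0` as the default value, and the column layout are all illustrative assumptions, not details from Dan's talk. The sketch clones the training rows, masks the service-provided columns in the second copy, and appends an indicator column that is `1.0` when the features are present and `0.0` when they are absent.

```python
from typing import List, Optional, Sequence, Set, Tuple


def augment_with_indicator(
    rows: Sequence[Sequence[float]],
    labels: Sequence[int],
    service_cols: Set[int],
    default: float = 0.0,  # assumed placeholder value for missing features
) -> Tuple[List[List[float]], List[int]]:
    """Return a doubled training set with a trailing presence indicator."""
    # Copy 1: features as observed, indicator set to "present" (1.0).
    present = [list(r) + [1.0] for r in rows]
    # Copy 2: service features replaced by the default, indicator "absent" (0.0).
    absent = [
        [default if i in service_cols else v for i, v in enumerate(r)] + [0.0]
        for r in rows
    ]
    # Labels are unchanged; each example simply appears twice.
    return present + absent, list(labels) + list(labels)


def serving_row(
    row: Sequence[Optional[float]],
    service_cols: Set[int],
    service_up: bool,
    default: float = 0.0,
) -> List[Optional[float]]:
    """Build a model input at serving time, matching the training layout."""
    if service_up:
        return list(row) + [1.0]
    # Service is down: ignore whatever sits in the service columns.
    return [default if i in service_cols else v for i, v in enumerate(row)] + [0.0]
```

Any model can then be trained on the augmented rows. At serving time, `serving_row(..., service_up=False)` produces the same masked layout the model saw in the second training copy, so an outage of the feature service looks like an input pattern the model has already learned from.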

Jon Krohn: 04:01

Nice. Gotcha.

Dan Shiebler: 04:02

And the other concept is fallbacks. Sometimes we have not just a single model, but multiple models, each of which can operate on different degrees of feature availability. For instance, perhaps we have one system that’s able to operate just on a raw object. Perhaps we have a single impression event on Twitter or something like that that indicates the parameters of a particular user visiting the website. And in order to generate a really, really good prediction, we want to pull in all these other sources of data. But we want to still be able to generate a prediction even with just that bare object.

04:41

So what we can do is build one system that just generates a really simple, quick prediction on that bare object, and other systems that rely on these other pieces of information and generate predictions as that information is pulled in. Then our system can start from the core prediction on the lightweight object and iterate on it, even if there’s high latency on the response of the individual features that we’re pulling in, or if these services are even down.
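
A rough sketch of the fallback pattern described above, using only the Python standard library. Everything named here is hypothetical: `predict_with_fallback`, the toy `light_model` and `full_model`, the `past_reports` feature, and the 50 ms timeout are assumptions made for illustration. The lightweight prediction is computed first from the bare event, and the richer model replaces it only if the extra features arrive in time.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, Tuple

# Shared worker pool for feature lookups (the size is an arbitrary assumption).
_EXECUTOR = ThreadPoolExecutor(max_workers=4)

Event = Dict[str, Any]


def light_model(event: Event) -> float:
    """Toy stand-in for a model that needs only the bare event."""
    return 0.5 if event.get("new_device") else 0.25


def full_model(event: Event, extra: Dict[str, Any]) -> float:
    """Toy stand-in for a model that also uses fetched features."""
    return min(1.0, light_model(event) + 0.25 * extra["past_reports"])


def predict_with_fallback(
    event: Event,
    fetch_features: Callable[[Event], Dict[str, Any]],
    timeout_s: float = 0.05,
) -> Tuple[float, str]:
    """Return (score, which_model), falling back to the light model."""
    # Always compute the cheap prediction first so there is something to return.
    baseline = light_model(event)
    future = _EXECUTOR.submit(fetch_features, event)
    try:
        extra = future.result(timeout=timeout_s)
    except Exception:
        # Covers both a slow lookup (timeout) and a failing service.
        return baseline, "light"
    return full_model(event, extra), "full"
```

The design choice worth noting is that the caller never waits longer than `timeout_s` for enrichment: a slow or failing feature service degrades the quality of the prediction rather than the availability of the system.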

Jon Krohn: 05:12

Right. Cool. I love that. That was such a concise explanation of Resilient ML. So Resilient ML is important because it allows us to have machine learning models that still work, even if some features from the full complement of possible features aren’t available to the model. And two of the key ways that we can implement Resilient ML are with defaults and fallbacks. Thanks so much, Dan, for this quick tour of Resilient ML on this Five-Minute Friday. Thank you so much for taking the time, and hopefully we’ll have you on for a full guest episode of Super Data Science very soon.

Dan Shiebler: 05:49

Can’t wait. Thanks for having me, Jon.

Jon Krohn: 05:52

Okay, and that’s it for this special guest episode of Five-Minute Friday, filmed onsite at ODSC West. We’ll be back with another one of these soon. Until next time, keep on rocking it out there, folks, and I’m looking forward to enjoying another round of the Super Data Science Podcast with you very soon.