Jon Krohn: 00:00:00

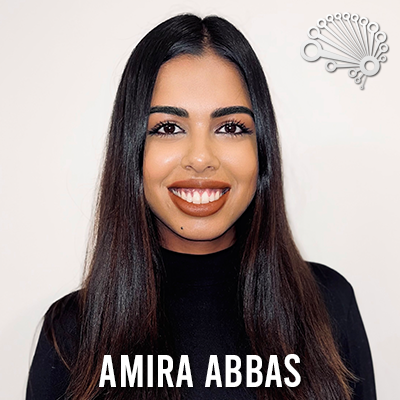

This is episode number 721 with Dr. Amira Abbas, Quantum Computing Researcher at the University of Amsterdam. Today’s episode is brought to you by Gurobi, the Decision Intelligence Leader, by ODSC, the Open Data Science Conference, and by CloudWolf, the Cloud Skills platform.

00:00:22

Welcome to the Super Data Science Podcast, the most listened-to podcast in the data science industry. Each week, we bring you inspiring people and ideas to help you build a successful career in data science. I’m your host, Jon Krohn. Thanks for joining me today. And now, let’s make the complex simple.

00:00:53

Welcome back to the Super Data Science Podcast. We’re extremely fortunate to have the brilliant and eloquent, Dr. Amira Abbas on the show today. Amira is a postdoctoral researcher at the University of Amsterdam, as well as QuSoft, a world-leading quantum computing research institution that is also in the Netherlands. She was previously on the Google Quantum AI team and she did Quantum ML research at IBM. She holds a PhD in Quantum Machine Learning from the University of KwaZulu-Natal, which is in South Africa. While she did that, she was a recipient of Google’s PhD fellowship. Much of today’s episode will be fascinating to anyone interested in how quantum computing is being applied to machine learning. There are a few relatively technical parts of the conversation that might be best suited to folks who already have some familiarity with ML.

00:01:39

In this episode, Amira details what quantum computing is, how it’s different from the classical computing that dominates the world today, and where quantum computing excels relative to its classical cousin. She fills us in on key terms such as qubits, quantum entanglement, quantum data, and quantum memory. Critically, she fills us in on where quantum machine learning shows promise today, as well as where it might in the coming years. She tells us how to get started in quantum ML research and she provides us with today’s leading software libraries for quantum machine learning. All right. You ready for this mind-blowing episode? Let’s go.

00:02:20

Amira, welcome to the Super Data Science Podcast. How are you doing today? Where in the world are you calling in from?

Amira Abbas: 00:02:26

I’m good, thanks. So I’m calling in from South Africa actually, but thanks for inviting me. Thanks for having me. It’s really nice to be here.

Jon Krohn: 00:02:33

Nice. Yeah. We do get to enjoy your South African accent, which is wonderful, and our audience gets to enjoy my very sick, very deep voice. We’ll probably edit out my hacking coughs, but those are going to be happening in the recording at least. Yeah, the show must go on. We have such amazing topics planned for you today, Amira. I think we’re going to jump right into the technical questions because I want to squeeze in as much as we possibly can. I’ve been so excited. I’ve been excited, for years, to record an episode on quantum machine learning. Now that it’s happening with an expert like you, I just… I woke up this morning, even with my cold, it felt like Christmas morning or something, where I was like, “Oh my God. I’m going to learn so much today.”

00:03:27

You recently joined the University of Amsterdam as a postdoctoral researcher where you’re working on quantum computing. You specialized in quantum machine learning in the past, and that might still be something you’re doing at Amsterdam. For our audience who might be new to both of these topics, quantum computing as well as quantum machine learning, can you provide a brief overview of these topics and why it’s gaining so much attention?

Amira Abbas: 00:03:53

Yeah, sure. Maybe we can start with quantum computing first. I think most people have heard about this, but the short story is that we’re trying to build computers and we already have small-scale devices that work on different principles. They work on different rules, and these rules are what is described by quantum physics. The hope is to try and build these computers that work on quantum physics that we believe actually is a true description of nature. Hopefully, with these new computers, we can start to do more interesting things by being able to model things more efficiently and at larger scales. Quantum computers are quite beautiful, theoretically, and a lot of companies and universities are trying to build these things and scale them up. Now, of course, if we can imagine having quantum computers, which in principle should be able to do more interesting and hopefully more powerful things, the next step or the next question to ask is can we use them for things like machine learning to make machine learning a little bit more interesting and more powerful?

00:04:57

This then, combining these two things, quantum computers with machine learning, introduced the field of quantum machine learning. Instinctively, you might think, “Oh, well, it’s very obvious that we can make machine learning better if we put quantum computers, more powerful devices in there,” but what we’re realizing after a couple of years is that it’s not so straightforward. There are a lot of things within quantum computing that make information very delicate and intricate, and trying to use this to do machine learning tasks in a more efficient or better way is not that easy. This is the broad-level concept: trying to do machine learning better in some way, but while using quantum computers. Yeah. That’s what I’ve been trying to do for the last couple of years and what I’m still hoping to do a little bit of from the more theoretical side now at Amsterdam.

Jon Krohn: 00:05:46

Nice. I mean, feel free to dig even more into the technical aspects of this. For example, the key distinction, as far as I’m aware as an extreme novice with quantum computing, is that a key difference is that when we’re working with classical computing, I guess we can call it in contrast, with classical computing, we have bits that are either zeros or ones. But with quantum computing, they’re qubits and they’re not clearly zeros or ones. They’re like a probability distribution or something more like that.

Amira Abbas: 00:06:24

Yeah, that’s a beautiful way to describe it and think about it. Exactly. When we talk about qubits in a quantum computer, you can think of it exactly like that: you have some sort of quantum mechanical system and these are just like a probability distribution over bits. Ultimately, when you want to observe the state of your system, this is famously known as the quantum measurement collapse problem. When you look at the distribution of your bits, or you try to understand, or analyze it, or measure it, then you immediately see a collapse to one possible combination of bits. Classical information again. But there’s a subtle difference here because you might think, “Well, if it’s a probability distribution over zeros and ones, how is this any different from making a classical computer probabilistic?” Quantum computing is a little bit special in that the probability distribution is actually described better by what we call probability amplitudes rather than real numbers between zero and one.

00:07:25

These probability amplitudes can take complex values, and so the distribution is a little bit different in that qubits can actually interfere with each other. We can create what’s called quantum interference. Probability amplitudes can be amplified or cancel each other out. You can get weird strange quantum effects or quantum phenomena through this interference that you can’t see with just making bits probabilistic in a classical sense. These probability distributions are a little bit different. As I alluded to earlier, the rules that govern quantum computers and these qubits, these quantum bits of information, is quantum mechanics. Quantum mechanics is that the evolution of your quantum system or whatever physical system you have, the evolution is linear, so the dynamics that describe the evolution within these computers is linear. If it’s not, then you also get weird crazy things that can happen.
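The interference described here can be seen in a tiny calculation. What follows is a minimal sketch in plain Python, not something taken from the conversation: the Hadamard matrix is a standard quantum gate, while the helper name `apply` and the fair-coin comparison are illustrative assumptions. Applying the gate twice makes the two paths to outcome 1 cancel, whereas a classical coin flipped twice stays random.

```python
import math

def apply(matrix, vector):
    """Multiply a 2x2 matrix by a length-2 vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

s = 1 / math.sqrt(2)
hadamard = [[s, s], [s, -s]]           # quantum gate acting on amplitudes
coin_flip = [[0.5, 0.5], [0.5, 0.5]]   # classical fair coin acting on probabilities

# Quantum: amplitudes can be negative (or complex), so paths can cancel.
amps = apply(hadamard, apply(hadamard, [1.0, 0.0]))
quantum_probs = [abs(a) ** 2 for a in amps]

# Classical: probabilities are non-negative, so nothing ever cancels.
classical_probs = apply(coin_flip, apply(coin_flip, [1.0, 0.0]))

print(quantum_probs)    # close to [1.0, 0.0]: the two paths to outcome 1 cancelled
print(classical_probs)  # [0.5, 0.5]: still a fair coin
```

The only difference between the two cases is the minus sign in the Hadamard matrix, which a matrix of classical probabilities cannot contain.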

00:08:22

I think you violate some fundamental physical properties, maybe time travel becomes real and all these other strange things. The dynamics have to be linear. Yes, and I think importantly, what’s easy to remember or what’s important to remember for quantum computers is that you start off with some initial state, which can be described, as you mentioned, as a probability distribution over zeros and ones over bits. You evolve this state with linear dynamics, so you can apply some linear operations. As a computer scientist, what might make this easy to think of is that quantum computing is described by a lot of linear algebra. Your initial state can be thought of as a very, very large vector, and the linear dynamics is just applying a matrix multiplication to this vector, evolving it. These matrices and vectors have certain constraints attached to them.

00:09:18

Eventually, when you want to measure your system, then you observe a particular bit string and you can repeat your experiment over and over again to get an understanding of the true probability distribution that describes your quantum output or the bit string that you’re expected to measure. This is quantum computing. Of course, machine learning fits very naturally on top of this because machine learning is also a lot of linear algebra. What we’re trying to do, in the sense of quantum machine learning, is try to understand a couple of things. How can we encode data into these quantum states and evolve these quantum states that encode data in interesting ways so that we can measure outputs that look like labels for a machine learning task, or maybe a probability distribution that we’re trying to generate for a machine learning setting? These are the kinds of high level questions that one can answer.
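The loop of preparing a state, evolving it with a matrix, and measuring many times can be sketched for a single qubit. This is a rough illustration under stated assumptions: the rotation gate, the angle `theta`, the shot count, and the helper names `apply` and `measure` are all hypothetical choices, and a real device would add noise that this simulation ignores.

```python
import math
import random
from collections import Counter

def apply(matrix, state):
    """Evolve a state vector with one matrix multiplication."""
    return [sum(m * a for m, a in zip(row, state)) for row in matrix]

def measure(state, shots, rng):
    """Sample bit strings with probability |amplitude|^2, once per shot."""
    n_bits = int(math.log2(len(state)))
    probs = [abs(a) ** 2 for a in state]
    outcomes = rng.choices(range(len(state)), weights=probs, k=shots)
    return Counter(format(i, f"0{n_bits}b") for i in outcomes)

# One qubit starting in state 0, written as a vector of amplitudes.
state = [1 + 0j, 0 + 0j]

# A rotation by angle theta (hypothetical choice of gate and angle).
theta = math.pi / 3
gate = [[math.cos(theta / 2), -math.sin(theta / 2)],
        [math.sin(theta / 2), math.cos(theta / 2)]]

state = apply(gate, state)
shots = 10_000
counts = measure(state, shots=shots, rng=random.Random(0))
estimate = {bits: n / shots for bits, n in counts.items()}
print(estimate)  # roughly 0.75 for "0" and 0.25 for "1"
```

Each shot returns a single classical bit string. Only the tally over many shots approximates the underlying distribution, which is why the experiment is repeated.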

Jon Krohn: 00:10:10

Let me try to paraphrase that back to you. With quantum computing, we have qubits instead of bits, and these qubits have probability amplitudes that have all kinds of delicateness, but also nuance to them that mean that they can represent information for some kinds of problems more efficiently than we can with classical computing. You mentioned there specifically that there’s linear algebra operations in the way that we interpret what goes on in these qubits. Because of that linear algebra, which if we have listeners out there who have been figuring out how to use machine learning algorithms themselves at home as opposed to using scikit-learn as some kind of high level abstraction, if you try to implement it yourself, you quickly realize that there’s lots of linear algebra operations that underlie that machine learning algorithm, and so similarly, you can take advantage of those linear algebra operations that we use in typical quantum computing and apply those to try to solve machine learning problems. Was that a reasonable-

Amira Abbas: 00:11:21

Yes, brilliant.

Jon Krohn: 00:11:21

… paraphrase?

Amira Abbas: 00:11:23

Yes. Feel free to ask more detailed questions. I know I’m speaking at a very high level and it might sound all abstract, and if I use any jargon that doesn’t make sense, just let me know.

Jon Krohn: 00:11:35

Yeah, let’s go into the time travel a bit more.

Amira Abbas: 00:11:39

Oh, no. Yeah. Yeah, yeah. This gets very science fiction-y very fast, but yeah, the time travel aspect.

Jon Krohn: 00:11:47

Well, but actually some aspect of quantum computing that is very science fiction-y, but as far as I can understand is quite real, is quantum entanglement. Do you want to dig into that one a bit?

Amira Abbas: 00:11:58

Yeah, sure. I think entanglement sounds very spooky at first. A lot of people think, “Wow, this is something crazy that two fundamental particles can become entangled. No matter how far you separate them in space, one tells you information immediately about the other, instantaneously about the other.” But mathematically, this is actually quite simple what entanglement means. It’s really very straightforward. In fact, I encourage you to even Google how to describe or how to even entangle two qubits. I mean, this is really something quite trivial. To me, I like to think in terms of math. Nothing is really spooky or weird here. It’s just that you cannot describe basically the state of one qubit without knowing the state of the other when they’re entangled. People get the impression that, “Wow, this is so strange because you can separate these things very far in space and it’s almost like you immediately have information about something you shouldn’t.”

00:13:08

Because if something is really, really far away, then I think Einstein said that information shouldn’t be able to travel faster than the speed of light. Initially, entanglement was thought to violate this, but actually not so, because even though you kind of know the state of another qubit that’s super far away, in order to communicate any information, you still have to send it somehow through a classical channel or something like that across space, so there is no violation. To me, there isn’t anything spooky, but entanglement is indeed a resource for quantum computing. It is shown that entanglement is useful in a couple of settings for things like communication complexity and other fields outside of machine learning. Indeed, it is a resource. It is a correlation that can exist between particles or qubits, if you want to call them that, that cannot be observed outside of quantum mechanical experiments. Of course, it also largely was the reason that the Physics Nobel Prize went to the Bell experiments and stuff like this, I think, last year. Yeah, it’s pretty cool.
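The claim that entangling two qubits is mathematically simple can be made concrete. The sketch below is one standard textbook construction (a Hadamard gate followed by a CNOT gate), not necessarily the one the speaker had in mind, and the helper names are illustrative. It also checks the statement that an entangled state cannot be described one qubit at a time: a two-qubit state with amplitudes a00, a01, a10, a11 splits into two separate single-qubit states exactly when a00·a11 − a01·a10 is zero.

```python
import math

def apply(matrix, state):
    """Evolve a state vector with one matrix multiplication."""
    return [sum(m * a for m, a in zip(row, state)) for row in matrix]

s = 1 / math.sqrt(2)
# Hadamard on the first qubit, identity on the second (basis order 00, 01, 10, 11).
h_on_first = [[s, 0, s, 0],
              [0, s, 0, s],
              [s, 0, -s, 0],
              [0, s, 0, -s]]
# CNOT: flip the second qubit when the first qubit is 1.
cnot = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]

def is_product_state(state, tol=1e-12):
    """A two-qubit pure state factorizes iff a00*a11 - a01*a10 == 0."""
    a00, a01, a10, a11 = state
    return abs(a00 * a11 - a01 * a10) < tol

start = [1.0, 0.0, 0.0, 0.0]            # both qubits in state 0
superposed = apply(h_on_first, start)   # still two independent qubits
bell = apply(cnot, superposed)          # amplitudes only on 00 and 11

print(is_product_state(superposed))  # True
print(is_product_state(bell))        # False
```

In the final state, measuring one qubit as 0 means the other is also 0, and likewise for 1, yet neither qubit has a definite state of its own before measurement.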

Jon Krohn: 00:15:06

What if you, as a data scientist, could not only inform decision-making but also drive it? As a leader within your organization, imagine confidently harnessing provably optimal decisions. Gurobi Optimization — the leader in decision intelligence technology — equips you to unlock the power of mathematical optimization and transform your organization. Trusted by 80% of the world’s leading enterprises, Gurobi’s cutting-edge optimization solver, lightweight APIs, and flexible deployment ease the transition from data to decision. Visit gurobi.com/sds to get exclusive access to a competition showcasing optimization’s importance, with prizes for top performers. That’s G-U-R-O-B-I.com/sds.

00:15:08

All right. Us talking about this, I was going to ask how do the qubits get entangled? But actually, I realized that I have to ask an even more fundamental question before I can do that, which is what is a qubit anyway? What’s getting entangled when the qubits are entangled?

Amira Abbas: 00:15:26

Yeah, this is a good question. I should probably also start by declaring that I’m not an experimental physicist. Experimentalists are really the ones in the lab building these qubits and building these quantum computers. I just sit with my pen and paper, but I think how we can think about it is quite simple. In nature, we have a couple of fundamental particles that make up other particles and other things, but these fundamental particles, when we try to describe them, when we zoom into these tiny scales on these very small particles, this is when we realize that the classical physics we learn in high school starts to break down. This is when we realize that the true description is quantum. Now, what physicists are trying to do and people in the labs are trying to say, “Okay. How do we force these fundamental particles to actually exhibit quantum properties?”

00:16:21

The answer is, it depends. It depends which particle we have, and then it depends on what we need to do to exhibit this quantumness. For example, there are companies and institutions trying to build quantum computers out of photons. These are photonic-based quantum systems. A photon, you can think of as just like a packet of light. You have a laser and you have photons that come out through this laser, and each photon can be thought of as a qubit. You can manipulate photons in certain ways, and these can act as your qubits, but you can also make qubits out of electrons, for example. There are certain types of electrons that exhibit quantum properties when you cool them down to very, very low temperatures. These are the beautiful chandelier quantum computers that you often see pictures of with, I think, IBM and a couple of other companies trying to do these.

00:17:14

Yes, so I think photons and electrons, these superconducting quantum computers or qubits are probably the most popular ones you will see. But I think there are also other companies and people trying other things, for example, with ions, which are also a different particle. They’re trying to create, for example, IonQ, which is another company trying to create qubits out of ions and using ion traps and so on. Yes, so I hope this gives a little bit of the intuition, is that basically there are a lot of fundamental particles and if we do different things to them, we can start to see quantumness. To be honest, we have small-scale devices with a couple of different attempts or different particles, but we don’t know how to scale them up to large sizes that matter yet. This is still an engineering problem that we have to figure out, and which one of these will win the race is something that I cannot say. I think a lot of different people will have different opinions, but it will be a very exciting next couple of years to see what wins.

Jon Krohn: 00:18:14

Yeah. Right, right. Yeah. It just occurred to me, and maybe this is obvious to a lot of our listeners, and maybe I knew this before but I just repieced it together, is that qubit, Q-U-B-I-T, that must stand for quantum bit. That’s probably the…

Amira Abbas: 00:18:30

Yeah, exactly.

Jon Krohn: 00:18:33

Which is probably where I should have started. But yeah, so these qubits can be made up of photons, electrons, or ions, potentially other materials. Yeah. As you’re saying, the kinds of quantum computers that we have today, how many qubits can we have in one quantum computer today?

Amira Abbas: 00:18:54

Yeah. I guess, again, it also depends on the system. I think IMQ in particular have over 100, for sure. IBM, Google, they have also in the hundreds. There are also some roadmaps for some of these companies where they project they will have a couple thousand qubits in the next year or two, so this will be quite exciting. But to just give you an idea, so everything we have is, I guess, in the order of hundreds, maybe if we’re lucky, a thousand in the next couple years or so. But just to give you a sense of, these qubits are still very noisy, meaning they house a lot of error. They’re difficult to manipulate, they’re difficult to keep the quantumness in them, so they’re very hard to use for practical purposes. I think only once we get to the order of millions will we be able to start to see interesting useful applications of quantum computers and quantum machine learning as well.

Jon Krohn: 00:19:56

Right, right, right. Yeah. I guess, as a very loose parallel, we’re kind of where we were in the 1950s or something with classical computing in terms of the number of bits that we can fit on a transistor and the complexity of the computations we can do. Although my understanding, and you can tell me I’m completely wrong, but my vague understanding is that because a qubit has so much more expressiveness than a classical bit, you can do more with fewer of them. It still makes sense to me that, okay, if we’re going to be doing machine learning, we’re going to need millions of qubits. That makes sense to me. But it seems like the kinds of problems that we’re seeing quantum computers solve, they’re able to solve much more complex problems than a classical computer with that same number of bits would be able to do.

Amira Abbas: 00:20:59

Yeah. I’m so glad you brought this up because maybe I didn’t explain it too well initially, because indeed you’re right. I think people love to make the statement that with just… I forget the number, 120 qubits or something, a quantum computer can already hope to store more pieces of information than the number of atoms in the observable universe. It sounds strange when I say to you that you need millions of qubits to do some interesting things, and that’s because I left out an important piece, which is that because these qubits are so noisy, we need to perform what’s called error correction on top of that. We really need to be able to remove this error by having more qubits in there, creating more redundancy to be able to actually do accurate simulations or run accurate algorithms. We need more qubits in there, not for storing information and doing things, computing interesting things, but rather for correcting these errors that we see. We need quantum error correction to work, and this is why the number goes up into the millions to do interesting things.

Jon Krohn: 00:22:14

Yeah. That is super interesting, and I had no idea about that. Isn’t that also, that error correction, is that related to… Maybe I’m completely misremembering this, but when we do quantum computing experiments, we often will run it several times to try to get a reliable average of the answer?

Amira Abbas: 00:22:34

It’s a little bit, but it’s a little bit different. I should also say, I’m speaking outside my breadth of expertise here by talking about error correction, because there are a lot of researchers just focusing on this area alone. You’re right. A quantum computer, like I said, you start with some initial state, like some quantum state, and you can think of this as a vector. You apply some linear operations that evolve your quantum state, and then what you can do at the end of your quantum experiment, you can observe your system, meaning you can get a classical bit string out of it. You can see what it collapses to. But as you just said, this is just one of many possible bit strings we can observe. To get an accurate description of the probability distribution of bit strings, you run your experiment multiple times and you get an average.

00:23:24

But error correction is a little bit different in that it concerns what happens when you actually physically evolve your system on a quantum computer, so when you apply individual gates to your qubits. Meaning, let’s say you have a qubit in state zero and you want to flip it to state one, so you apply what’s called an X-gate, similar to classical computing. Because there’s noise in these qubits, these gates that you apply are sometimes not doing what they’re supposed to do. Error correction means like, “Let’s have some buffer qubits in there to make sure that the operations that we apply are actually the operations we want to see.” They ensure quality of our actual operations in the quantum computer and not just about the output.

Jon Krohn: 00:24:12

Very cool. All right. Yeah. I’m grasping here that with classical computing, classical bits, we probably, since the very beginning, probably since transistors were invented, something about them is that they are very reliably either a zero or a one. We can be quite confident about what’s going on with them. With quantum computing, as you’ve mentioned already in this show, just keeping them in that quantum state is quite fragile and there’s a lot of nuance in what we can interpret out of them. But simultaneously, because of this fragility, we need lots of repetition, or redundancy, or error correction to be able to collapse reliably into some classical result.

Amira Abbas: 00:25:05

Yeah, exactly. I think it’s also important to say that, classically, we need error correction as well. We’ve just managed to do it on classical computers in a much more efficient manner. We still have to figure out how to scale this up in a better way on a quantum computer. I think we largely have a lot of good ideas. But because quantum information, like you just mentioned, is so fragile, it’s not very easy. Also, there exist a lot of fundamental problems. For example, there is this no-cloning theorem, meaning we can’t just copy one state over to another… You can’t just copy the state of a qubit over to another. Whereas classically, you can do this, so you can create redundancy and copies rather easily. This helps you with things like error correction. But on the quantum side, there are barriers to this. We have to do things a little bit more cleverly, and I think this is where all these ideas of quantum error correction come into play. Yeah. It’s a super nice area.
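The classical side of this comparison, where copying a bit gives cheap redundancy, can be sketched with a three-copy repetition code and a majority vote. This is a simple illustrative scheme, not a description of how any particular machine does error correction, and the error rate `flip_prob` and trial count are made-up values. The `encode` step is exactly the kind of copying that the no-cloning theorem rules out for an unknown qubit state.

```python
import random

def encode(bit):
    """Classical redundancy: copy the bit three times."""
    return [bit, bit, bit]

def noisy_channel(bits, flip_prob, rng):
    """Flip each bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]

def decode(bits):
    """Majority vote recovers the bit if at most one copy flipped."""
    return 1 if sum(bits) >= 2 else 0

rng = random.Random(0)
flip_prob = 0.1        # hypothetical per-bit error rate
trials = 100_000

# Send a single unprotected 0 and count how often it arrives flipped.
raw_errors = sum(noisy_channel([0], flip_prob, rng)[0] != 0
                 for _ in range(trials))
# Send three copies of 0 and count how often the majority vote is wrong.
coded_errors = sum(decode(noisy_channel(encode(0), flip_prob, rng)) != 0
                   for _ in range(trials))

print(raw_errors / trials)    # near 0.1
print(coded_errors / trials)  # near 3*p**2 - 2*p**3 = 0.028
```

Tripling the number of bits cuts the error rate from about 10% to about 3% in this toy setting, which gives a feel for why protected computation needs many more physical carriers than logical ones.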

Jon Krohn: 00:26:07

Nice. Yeah, super interesting. So really quickly before I start getting more into your area of expertise and really getting into quantum machine learning versus classical machine learning, that kind of stuff, just really quickly at a high level in terms of the hardware to make this work, you’ve probably seen… I’ve never seen a quantum computer, but you’ve probably seen them. What are they like? How big are they? Do they need super cooling or something to work? Just at a high level, give us some color on what these quantum computers are like?

Amira Abbas: 00:26:41

Yeah, sure. So I’ve been super lucky to see quite a few. So I think the first one I saw was IBM’s and the most distinct thing I remember about it was walking into the room and the sound that these cooling devices make is really strange. I think if you Google it, you can Google sound of a quantum computer and it’s like this strange machinery that sounds like… Every couple of seconds. To be honest, I’m not an experimentalist. I don’t really understand what all these machines are doing. I just remember also being so taken aback by the size. So this was a superconducting quantum computer that I saw, and it was this beautiful chandelier made of real gold hanging from the ceiling, all these crazy tubes and things coming out of it. I just thought, wow, this is a really impressive device.

00:27:34

Indeed these ones are the ones that need really, really cold temperatures down at the bottom of the chandelier. So the room itself is not cold. But yes, getting this part at the bottom super cold is the task. I was also really lucky to see a photonic-based quantum computer by a company in Toronto named Xanadu, which I think they’re now beyond startup status, so I won’t call them a startup, but they’re building, or they have built, photonic-based systems. There they have really cool offices and I think their quantum computers are on the, if I’m not mistaken, it’s on the top floor, on the penthouse of a really tall building in Toronto. So I remember doing a tour there, and this was also really beautiful to see. But yeah, they have these tabletops where all these lasers now matter and manipulating these photons is what’s important. I think the equipment is to get, for example, a table perfectly level is just crazy expensive. So there are a lot of effort and time and things that go into all these computers. But yes, I think these are the only two types I’ve seen so far. I haven’t seen an ion-based quantum computer. I’d love to. But yeah, they’re really beautiful.

Jon Krohn: 00:28:45

Cool, yeah, that was exactly the color I’m looking for, really rich descriptions. It’s really funny, that thing you mentioned about getting a table perfectly level, just an episode of Rick and Morty, where Rick creates a perfectly flat… He spends all this time getting a perfectly flat surface, and it’s obviously very silly, but then when people step on it, they’re like, “Oh. Oh my goodness, it’s so flat. It’s just so perfect.”

Amira Abbas: 00:29:09

Yeah, doesn’t he have to remove from Morty’s memory, the flatness because he steps on perfect level and he just freaks out too much. I think he has to wipe it from his memory, right?

Jon Krohn: 00:29:21

I think that’s right, yeah. Yeah, so you watch that show as well.

Amira Abbas: 00:29:25

Yes. My cat, his name is Morty, so yeah.

Jon Krohn: 00:29:32

That’s funny. Yeah, I’m in desperate need of a new episode. It’s been so long. I was just looking into this yesterday. At the time of recording, at least, it’s been almost a year since there’s been a new episode, so.

Amira Abbas: 00:29:44

Yeah, they do take their time.

Jon Krohn: 00:29:46

They do, but it’s worth it. Yeah, all right, so beyond our Rick and Morty recommendation, what else do I have for you? So yeah, so let’s dig into a bit more of your expertise on quantum machine learning. So what kinds of problems… I guess, and maybe it makes sense to ease into this, again, with the quantum computing again, as opposed to specifically quantum machine learning. But what kinds of problems can we solve with quantum computing that we might not be able to solve as efficiently with classical computing? Then, yeah, same question for quantum machine learning. Why do quantum machine learning? What’s the advantage of that relative to some classical approach?

Amira Abbas: 00:30:30

Yeah, this is a very good question, and to be honest, we don’t have a lot of clear, concrete directions for broad problem classes that quantum computers can just immediately provide blanket advantages to. So the most obvious one is the one that caused all this excitement about quantum computing and got all the funding and things like this, and that’s this problem of prime factorization, which also goes into a lot of cryptographic schemes and so on. I think this idea of Shor’s factoring algorithm, which uses quantum computing to factorize very large numbers into their prime constituents, this was the game changer because prime factorization is believed to be a very hard problem for classical computers to solve. So I think the best known algorithms to approach this problem take exponential time on a classical computer. So what Shor did was introduce an algorithm on a quantum computer that takes polynomial time to solve this prime factorization problem.

00:31:37

So this was super exciting because finally there was a useful problem, something that’s used in a lot of things, and important things like cryptography and so on, where quantum computers seem to provide an exponential speed up and this is what we’re looking for. But unfortunately since then there hasn’t been many concrete things following up on this. There hasn’t been too many examples. So what researchers are trying to do now at a high level is look for these types of problems that quantum computers seem to be naturally suited for. This is this whole field of computational complexity theory, studying the hardness of problems and how much resources is needed to tackle them and where quantum computers can be beneficial. So to be quite frank, from this complexity theory point of view, factoring is the best thing we have so far. So we’re still trying to find other types of problems that seem to be suited for quantum computers. We don’t have any interesting, too much interesting things, I think to say there yet, but I’m hopeful that we will soon.
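For readers curious about what Shor’s algorithm actually speeds up, here is a minimal classical sketch of its overall structure. Factoring a number `n` reduces to finding the period of `a**x % n` for a randomly chosen `a`, and the quantum computer is used only for that period-finding step. In this sketch the quantum step is replaced by a brute-force loop, so it shows the reduction rather than the speedup. The function names are illustrative, and the inputs 15 and 7 are a common textbook example, not something from the conversation.

```python
from math import gcd

def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, by brute force.

    This is the step Shor's algorithm hands to the quantum computer.
    The loop here can take a number of steps comparable to n itself.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(n, a):
    """Classical post-processing: turn the period of a mod n into factors of n."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared   # lucky guess already shares a factor
    r = find_period(a, n)
    if r % 2 == 1:
        return None                  # odd period: retry with another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                  # trivial square root: retry with another a
    p = gcd(half - 1, n)
    return p, n // p

print(factor_from_period(15, 7))  # (3, 5)
```

For 15 and 7 the period is 4, since the powers of 7 modulo 15 run 7, 4, 13, 1, and the factors fall out of a single greatest-common-divisor computation.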

Jon Krohn: 00:32:47

Be where our data-centric future comes to life, at ODSC West 2023 from October 30th to November 2nd. Join thousands of experts and professionals, in-person or virtually, as they all converge and learn the latest in Deep Learning, Large Language Models, Natural Language Processing, Generative AI, and other topics driving our dynamic field. Network with fellow AI pros, invest in yourself in their wide range of training, talks, and workshops, and unleash your potential at the leading machine learning conference. Open Data Science Conferences are often the highlight of my year. I always have an incredible time, we’ve filmed many SuperDataScience episodes there and now you can use the code SUPER at check out and you’ll get an additional 15% off your pass at O-D-S-C.com.

00:33:34

Super interesting. So then, yeah, in terms of practical applications, yeah, the cryptography thing, you hear a lot. You also hear it a lot in the context of all of our passwords are going to be meaningless and the internet’s going to be a free for all, which I don’t know if you have thoughts on that. But I feel like I’ve also, and this is a vague memory, but somehow things like the traveling salesman problem, I feel like I’ve come across as something that is very hard for classical computers famously. So the traveling salesman problem, for any of our listeners that have done computer science is something that’ll be a really obvious problem. But with the traveling salesman problem, when you have say, three cities that a hypothetical salesperson needs to travel between, it’s very easy to figure out what’s the optimal route between the three cities to minimize travel time.

00:34:31

But as you add in additional cities, a fourth city, a fifth city, sixth city, it starts to… The compute complexity becomes, yeah, I can’t remember exactly if it’s polynomial or exponential, but it’s a crazy… The complexity gets very, very hard, very fast. But yeah, somehow it’s a super vague memory, but I feel like somehow quantum computers can do that instantaneously, solve that kind of problem.
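
To make the growth concrete, here is a minimal sketch in Python. It assumes straight-line distances between cities and a fixed starting city; the function names `tour_count` and `brute_force_tsp` are illustrative, not from the episode. With those assumptions, the number of distinct round trips through n cities is (n - 1)!/2, which grows even faster than an exponential.

```python
from itertools import permutations
from math import dist, factorial


def tour_count(n_cities):
    """Distinct round trips through n cities (fixed start, direction ignored)."""
    if n_cities < 3:
        return 1
    return factorial(n_cities - 1) // 2


def brute_force_tsp(points):
    """Try every ordering of the remaining cities and keep the shortest loop."""
    best_length, best_tour = float("inf"), None
    for order in permutations(range(1, len(points))):
        tour = (0,) + order  # always start and end at city 0
        length = sum(
            dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
            for i in range(len(tour))
        )
        if length < best_length:
            best_length, best_tour = length, tour
    return best_length, best_tour


# Three cities give a single possible loop; the count explodes soon after.
for n in (3, 4, 5, 10, 15):
    print(n, "cities ->", tour_count(n), "distinct tours")

# Four cities on the corners of a unit square (made-up coordinates).
print(brute_force_tsp([(0, 0), (0, 1), (1, 1), (1, 0)]))
```

Brute force is only one classical approach; smarter exact algorithms exist, but none is known to run in polynomial time.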

Amira Abbas: 00:34:55

Yes, this is a good question to bring up. So I don’t think so. So, let me maybe cross this into-

Jon Krohn: 00:35:00

Oh, do you know what I might have been thinking of?

Amira Abbas: 00:35:00

Yeah.

Jon Krohn: 00:35:05

I might’ve been thinking of genetic computing.

Amira Abbas: 00:35:08

Oh, maybe, yeah.

Jon Krohn: 00:35:10

Where there’s… Yeah, I might be messing things up. This is one of those classic things where you get-

Amira Abbas: 00:35:16

No, not at all. I think this is a really good thing to bring up because even myself, I thought, oh, quantum computers can encode information, like an exponential amount of information into a small number of qubits. So you would think that these very large combinatorial problems are something easy for a quantum computer to solve, in particular like this traveling salesman problem. Because like you say, as you add in more cities, the number of combinations of cities you can visit starts to grow exponentially, so the problem becomes very difficult to find what’s the best route. We hoped that, okay, well, a quantum computer can cast all these variables into a state of superposition, and maybe we can understand in superposition what’s the best distance or something like that. But it turns out to not be so straightforward. In fact, this traveling salesman problem is known to be an NP-hard problem under certain assumptions.

00:36:12

NP-hard means basically requires a ton of resources to solve regardless of the system you’re looking at, whether it’s quantum or classical. Maybe I should also say right now at the get go that we don’t believe… So NP-hard problems are the hardest problems we can face in computer science. So these are this traveling salesman problem, for example, and a few others. We don’t believe that quantum computers can solve NP-hard problems in an efficient manner. So we don’t believe that they will give us polynomial time algorithms to solve NP-hard, like really hard, problems. So this is unfortunate. A way to see this is also quite straightforward. There’s one particular problem in computer science that’s called the Boolean satisfiability, so SAT, S-A-T, the SAT problem. SAT is known to be a very hard problem for a classical computer to solve. In fact, we don’t know any sophisticated ways to solve this problem other than just brute force search.

00:37:19

So this is the best we can do classically, and this takes exponential time. Now with quantum computers, we know a very nice result called Grover’s search algorithm. So this is a search procedure that gives us a quadratic speed up over classical search, unstructured search. So we know through the lower bounds of Grover that we can apply brute force search only at a quadratic speed up with a quantum computer. In principle, if we can only solve SAT quadratically faster, we can’t really hope to do anything interesting with the SAT problem. SAT is at the foundation of all NP-hard problems in computer science. So most NP-hard problems derive themselves from SAT. So it’s a really depressing statement. It’s like, oh, well, we have all these tons of hard problems, but quantum computers can’t really do anything interesting. But this is just a belief, it’s not a proof of course.
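
The gap between "exponential" and "quadratically faster" can be sketched numerically. The Python below is an illustration under simplifying assumptions: SAT is treated as unstructured search over all 2^n assignments, exactly one assignment is assumed to satisfy the formula, and Grover's algorithm is simulated classically by tracking amplitudes, which is only feasible for small n. All function names are hypothetical.

```python
import math


def classical_checks(n_vars):
    """Worst-case number of assignments a brute-force SAT search tries."""
    return 2 ** n_vars


def grover_iterations(n_vars):
    """Approximate Grover iterations when exactly one assignment satisfies."""
    return math.floor(math.pi / 4 * math.sqrt(2 ** n_vars))


def grover_success_probability(n_vars, marked=0):
    """Track Grover's amplitudes classically (small n only)."""
    size = 2 ** n_vars
    amps = [1 / math.sqrt(size)] * size  # uniform superposition
    for _ in range(grover_iterations(n_vars)):
        amps[marked] = -amps[marked]         # oracle: flip the marked sign
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]  # diffusion: reflect about mean
    return amps[marked] ** 2


# The quantum count is roughly the square root of the classical one,
# so it still doubles every time two variables are added.
for n in (3, 10, 20, 40):
    print(n, "variables:", classical_checks(n), "vs", grover_iterations(n))

print(round(grover_success_probability(3), 3))
```

The square root of 2^n is 2^(n/2), which is still exponential in n. That is the sense in which a quadratic speedup does not make the problem efficient.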

00:38:19

But it doesn’t mean that other problems aren’t out there that quantum computers can provide exponential speedups for, and they’re still useful. So for example, factoring, which is not NP-hard or not believed to be NP-hard, but it’s still… We don’t have any practical classical algorithms that can solve this efficiently. So yeah, I hope this sheds some light on this area.

Jon Krohn: 00:38:41

Totally. Everything you’ve said has been so fascinating and so interesting and novel to me. Yeah, thank you so much. Amazing. Okay, cool. So limited applications today where we know that quantum computing provides some big improvement over classical computing, the one example being prime factorization. But then with quantum machine learning, why are people excited about that? Why were you excited about that? Why have you spent so much time researching it? Yeah, what is the potential in quantum machine learning? It sounds like you’re saying, “Well, we don’t yet know of many other applications of quantum computing,” and yet it seems like there could be something here with quantum machine learning. Why is it exciting? Why are people pursuing that?

Amira Abbas: 00:39:36

Yeah, so maybe let’s start by rewinding a little bit as to what quantum machine learning is, or what do I mean when I speak about it, because I think there are a lot of different ways to think about it, and then we can motivate a little bit some of the successes. Because you’re right, I mean, from a complexity theory point of view, this very high level point of view, it seems a bit counterintuitive when I say, “Quantum computers aren’t good for hard problems.” But you’ll see that I think there are still pockets and areas that quantum computers can be super useful for, and one of them is machine learning. So when I say quantum machine learning, there are a couple of ways to think about it. The first is, well, in regular machine learning, we’re always concerned with a data set and we want to do something with that data.

00:40:21

We maybe want to learn some structure, or we want to apply some labels to a new piece of information that comes in from a distribution that looks like this data set and so on. So there’s usually some notion of data. In the quantum machine learning picture, we can say, okay, maybe this data is something classical like our pixels on a picture that we put into a vector, maybe it’s just a classical vector. But there’s also this idea or notion of quantum data. What this means, we can talk about a little bit later. But basically when we have data in this quantum machine learning picture, we need to encode it into a quantum state. So we need to somehow get it into the quantum computer to be able to process it. So this is step one. Step two is then once it’s in the quantum computer, once it exists as a quantum state, what do we do to it? How do we evolve the quantum state? How do we change it? How do we apply operations to it that’s meaningful?

00:41:20

And then the step three, the last step of course, is how do we measure our quantum system to get an output that is useful for us in machine learning? If we want to label the picture cat or dog, how do we interpret a bit string measurement out of our quantum system such that it tells us it’s a cat or a dog? There are many different ways to do that. There are many different ways to encode data, to evolve it and to read out. So when people do quantum machine learning, 99% of the time they’re trying to study how these aspects work and how we should do them under certain assumptions because it’s almost impossible to say this is a blanket approach to encode every piece of information. This is a blanket approach to evolve every piece of information, and this is how you should read it out. It really always depends. It depends on the structure of your data, your specific machine learning task and what it is you want to do.
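
The three steps can be sketched on a single simulated qubit. This is a toy illustration, not a method described in the episode: the choice of a Y-axis rotation for encoding, the single tunable angle `theta`, and the "probability above 0.5 means dog" readout rule are all assumptions made for the example. A real device would also return sampled bits rather than the exact probability computed here.

```python
import math


def ry(angle):
    """2x2 matrix for a rotation about the Y axis of a single qubit."""
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    return [[c, -s], [s, c]]


def apply(gate, state):
    """Multiply a 2x2 gate into a 2-entry state vector."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]


def classify(x, theta):
    state = [1.0, 0.0]               # start in |0>
    state = apply(ry(x), state)      # step 1: encode the feature x
    state = apply(ry(theta), state)  # step 2: evolve with a tunable gate
    prob_one = state[1] ** 2         # step 3: probability of measuring 1
    return ("dog" if prob_one > 0.5 else "cat"), prob_one


print(classify(0.3, 0.0))
print(classify(2.8, 0.0))
```

Each of the three lines inside `classify` is a design decision, which is the point made above: there is no blanket choice for encoding, evolution, or readout.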

00:42:12

So quantum machine learning is really these three ingredients and understanding how these three things work, how to put the data into a quantum state, how to evolve it and how to understand it in terms of a label for machine learning tasks. So I hope that makes sense, and then we can talk about-

Jon Krohn: 00:42:28

It makes perfect sense.

Amira Abbas: 00:42:28

Okay, good.

Jon Krohn: 00:42:32

Yeah, I think I’ve got it. Yeah, so with quantum machine learning, we need to first convert our data into a quantum state. I do definitely want to dig later. I’ve made a note, and I’m not going to let it slide about this quantum data idea, which is completely novel to me. But yeah, so start with converting data into a quantum state, and then I wrote down perform operations, but you actually used a different verb or noun.

Amira Abbas: 00:43:05

Yeah, evolve.

Jon Krohn: 00:43:06

Evolve.

Amira Abbas: 00:43:06

But performing operations, exactly the same thing, right? Yeah, so let’s see that. Yeah, I think it’s the same. You can think about it the same.

Jon Krohn: 00:43:07

Evolve sounds cooler. We’re performing operations in classical machine learning all the time. I want evolution, that sounds way cooler. Then third step is then converting back from the quantum state into something that we can easily interpret like a vector of pixels or whatever.

Amira Abbas: 00:43:31

Exactly. Yeah, that’s exactly right. Okay, so then to your next point about now, how would this be advantageous? Here there are a couple of interesting things or results that have come out. I think probably the most intuitive one to a machine learning or data science audience is this idea of kernel methods and support vector machines, which quantum computers seem very naturally suited for. So for those of you, I just recap very quickly, the idea of a support vector machine and these kernel methods is usually your data is given to you in a very messy sort of space or setting. So let’s say you’re given a ton of information and now you want to classify this information into two subsets, let’s say cats and dogs, for example. So you can try to fit a very complicated classifier to say, okay, these are the cats and these are the dogs, and this is how I classify my data.

00:44:37

But another thing you could do is you could take your data and you could map it to a different space such that it becomes really easy to separate your classes with a very simple classifier like a linear classifier. So just drawing a line between these two data sets, these two subsets in your data. How people typically do this is they take their data and they map it to a higher dimensional space. There in a higher dimensional space, things become easy. You can have a hyperplane, which is, you can think of it as a generalization of a linear classifier that separates your classes. So this is this idea of what a support vector machine does. It takes your data and it maps it by something called a feature map into a higher dimensional space and then applies a linear classifier.
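
A small classical example shows the feature-map idea. The data, the map x -> (x, x^2), and the hand-picked line are assumptions chosen for illustration; a real support vector machine would learn the separating hyperplane rather than have it written in by hand.

```python
def feature_map(x):
    """Lift a 1-D point onto a parabola in 2-D: x -> (x, x^2)."""
    return (x, x * x)


def linear_classifier(point, weights=(0.0, 1.0), bias=-1.0):
    """Label 1 if the point lies on the positive side of w . p + b = 0."""
    score = weights[0] * point[0] + weights[1] * point[1] + bias
    return 1 if score > 0 else 0


def threshold_separates(data):
    """Check whether any single cut on the original 1-D line splits the classes."""
    xs = sorted(x for x, _ in data)
    cuts = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    for cut in cuts:
        for sign in (1, -1):
            if all((1 if sign * (x - cut) > 0 else 0) == y for x, y in data):
                return True
    return False


# Made-up data: class 0 sits in the middle, class 1 on both outer sides.
data = [(-2.0, 1), (-0.5, 0), (0.5, 0), (2.0, 1)]

print(threshold_separates(data))  # no single cut works in 1-D
print([linear_classifier(feature_map(x)) == y for x, y in data])
```

In the original space no single threshold separates the classes, but after the lift the horizontal line x^2 = 1 does.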

00:45:25

So why is this interesting for quantum and quantum machine learning? Well, because the step one that I told you about, which is taking your data and encoding it into a quantum state, you can actually think of it as this map into a higher dimensional space. So you can think about taking your data and trying to encode it into a quantum state as a quantum feature map. Then you can apply these operations, which can be a linear classifier. So if you figure out a clever way to map your data onto a quantum computer, such that you’re already separating things in a nice way in quantum space, whatever we call it, quantum Hilbert space, then this is super interesting and super useful. Moreover, if you show that this mapping is something very hard for a classical computer to do, then you’re in the money. So there you’ve got a useful kernel or useful, I should say, feature map, and you can use it for these machine learning tasks.
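
As a hedged sketch of what "encoding as a feature map" looks like, the Python below encodes a number into a single-qubit state and uses the squared overlap between two encoded states as a kernel. The single-qubit rotation encoding and the function names are assumptions for illustration. This particular map is trivial to compute classically, so it only shows the mechanics, not the kind of classically hard map discussed here.

```python
import math


def quantum_feature_map(x):
    """Amplitudes of RY(x)|0>: a toy single-qubit encoding of a number x."""
    return (math.cos(x / 2), math.sin(x / 2))


def quantum_kernel(x, y):
    """Squared overlap |<phi(x)|phi(y)>|^2 between two encoded states."""
    a, b = quantum_feature_map(x), quantum_feature_map(y)
    overlap = a[0] * b[0] + a[1] * b[1]  # amplitudes are real here
    return overlap ** 2


def gram_matrix(xs):
    """Kernel value for every pair of inputs, as an SVM would consume it."""
    return [[quantum_kernel(x, y) for y in xs] for x in xs]


for row in gram_matrix([0.0, 0.5, 3.0]):
    print([round(value, 3) for value in row])
```

For this encoding the kernel works out to cos^2((x - y) / 2), so nearby inputs have overlap close to 1 and inputs that differ by pi have overlap close to 0.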

00:46:25

So there have been some interesting results here where people have shown that certain quantum feature maps and these quantum support vector machines and so on, are classically intractable, meaning they’re difficult for classical computers to do. But the one downside is that the data sets that seem to be amenable to these maps are very artificial, so they don’t seem to be naturally suited for data that we have in nature and in real life. So people are still trying to figure out if there’s a natural quantum feature map that suits data sets that we’re interested in. So this is still an open question. So there’s been some proofs that there are some hard things that a classical computer can’t do, but we still need to find some use cases for them.

Jon Krohn: 00:47:57

Very cool, that was magnificently explained as well. Thank you so much. That was an absolute delight to hear. I guess, the summary point here is that with quantum support vector machines, with QSVMs, while there have been theoretical demonstrations that QSVMs provide, can in some circumstances provide a great speed up over a classical support vector machine solution, that is only the case with data that we aren’t aware of, data that are set up in a way that we don’t seem to observe from some natural phenomenon that that would typically occur and that we would be putting into our models.

Amira Abbas: 00:48:46

Yeah, exactly, yeah.

Jon Krohn: 00:48:48

Well, that’s super interesting. So the quantum support vector machines, so you in particular… This is jumping into our next big topic area. But it’s interesting that you went down the route of quantum support vector machines because you have written a groundbreaking paper called, The power of quantum neural networks, and you co-authored that with some amazing people with a top quantum researcher, David Sutter, as well as a Fields medalist. So this is a Fields Medal is like the Nobel Prize for mathematics. Yeah, Alessio Figalli has a Fields Medal, and you co-authored this power of quantum neural networks paper with David Sutter and Alessio Figalli. So yeah, it’s interesting to me because… And there’s a funny parallel here where today a conference like NeurIPS, Neural Information Processing Systems, it was created in the eighties to study artificial neural networks, which now we typically call deep learning. But there was a period of time in the early part of this millennium, in the first decade of this millennium, where even at a conference like NeurIPS, which has neural in the name, everybody was doing support vector machines.

00:50:23

Then it was AlexNet, the AlexNet moment in 2012 where Geoff Hinton and his team at University of Toronto showed that deep learning is practical for image recognition at large scales. That led to this reemergence of neural networks as the leading approach in machine learning and artificial intelligence for a broad range of problems. Not every problem for sure, but a lot of the AI advancements in the last decade have been made through deep learning through neural networks. So it’s interesting to me that you have this expertise in quantum neural networks, and yet it was still support vector machines, quantum SVM, that you went to an example of first.

Amira Abbas: 00:51:17

Yeah, I think… Yes, so you summarized the history of machine learning quite beautifully. I think the reason people like kernel methods or these support vector machines, however you want to call them, so much is because they’re beautiful to study from a theoretical point of view. So here they’re easy to… Well, not easy, but they’re at least a lot of known things that we can say about machine learning from the support vector machine picture. But neural networks, as you also mentioned, they just work so damn well, right? So they just work for everything we throw at them. They just can do it. They can solve really, really complex problems in almost a human-like fashion. So why? Researchers in machine learning have been trying to figure out from a theoretical point of view why, but it was mostly the empirical success that drove all the theoretical studies that came afterward.

00:52:14

To be honest, I think there’s still so much we don’t know from a theoretical point of view as to why these deep networks work so well. Then you might think, well, why do we ignore kernel methods if we can understand them theoretically? But the downside is we can’t implement them so well. There’s a cost in training and optimizing these support vector machines that scales like something like quadratic in the number of, I think, in the data. So this is quite expensive. I think neural networks, this doesn’t have this quadratic scaling. It’s actually linear, so the cost there is far cheaper. So you can optimize much, much larger neural networks with billions of parameters than you can with these support vector machines. So the reason I mentioned a support vector machine as an example now is because on the quantum side, it fits very naturally in a theoretical framework and understanding what you’re doing, but whether this will be the thing that we use on a quantum computer or not is still open for debate.

00:53:12

My guess is probably not. So then I guess this is a nice natural segue into what are these quantum neural networks? What do these things look like and are they quite similar to the classical neural networks that we know and love? What a quantum neural network is, you can understand it in one sentence, is it’s basically a quantum circuit which consists of certain operations. You can think of them as matrix operations, but these operations are parameterized, so they depend on parameters and just like normal machine learning, you need to figure out how to tweak and train and optimize these parameters to fit your data. So quantum neural networks are these parameterized circuits where now we have to figure out how to optimize these parameters for our machine learning task. So the reason we considered these class of models in this paper that you mentioned, this Power of Quantum Neural Networks paper, is because we wanted to see if they can do anything interesting. Do they offer us anything different over traditional classical neural networks? So this is what we tried to investigate here.
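
A parameterized circuit and its training loop can be sketched with one qubit and one parameter. Everything specific here is an assumption for illustration: the circuit (two Y rotations), the squared-error loss, the two made-up data points, and the use of a finite-difference gradient, which is convenient in a simulation but is not how gradients are usually estimated on hardware.

```python
import math


def prob_one(x, theta):
    """P(measure 1) for the toy circuit RY(theta) RY(x)|0>."""
    return math.sin((x + theta) / 2) ** 2


def loss(theta, data):
    """Squared error between predicted probabilities and 0/1 labels."""
    return sum((prob_one(x, theta) - y) ** 2 for x, y in data)


def train(data, theta=1.0, lr=0.5, steps=300, eps=1e-5):
    """Gradient descent with a central finite-difference gradient."""
    for _ in range(steps):
        grad = (loss(theta + eps, data) - loss(theta - eps, data)) / (2 * eps)
        theta -= lr * grad
    return theta


data = [(0.2, 0), (2.9, 1)]  # made-up (feature, label) pairs
theta = train(data)
print("loss before:", loss(1.0, data))
print("loss after: ", loss(theta, data))
```

The structure mirrors classical training: a model with tunable parameters, a loss, and an optimizer. The open question raised here is whether such models offer anything beyond classical neural networks.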

Jon Krohn: 00:54:21

Super interesting. So it sounds like where we could be with quantum neural networks today is that unlike the quantum SVM example, we may not have yet identified… Well actually, I mean you tell me, I don’t know why I’m… So it sounds like with QSVMs we have clear instances of situations, even if the data are not likely to be come across in the real world, we have examples of QSVMs being more efficient than classical SVMs. Is it the case that we’re not quite there with quantum neural networks yet?

Amira Abbas: 00:55:10

Exactly. You’re on the complete right way to think about it, right? Because how did we get there classically? Well, we have computers that we can do interesting, fascinating things with. Thanks to things like backpropagation we can scale up neural networks to billions and trillions of parameters now where we can train and optimize very large models at scale. But on the quantum side, we can’t do this yet, right? So in fact, a lot of the research that I did while I was a student researcher at Google was really trying to see if we can get backpropagation scaling of resources on these quantum computers. It’s really tricky because of this problem, that information is so delicate on a quantum computer.

00:55:56

So we can’t really say we have this notion of what our next best quantum model is going to be, our sort of quantum neural network, which is, I’m using it a little bit differently now because people use this term for parameterized models. But will it be the next best neural network? Probably not, but we can’t really run any impressive empirical studies yet because our quantum computers are still so small. So it’s unfortunate that we can’t replicate the same sort of classical machine learning success with just try-and-see experiments, so we have to try and maybe analyze things a little bit more carefully from a theoretical point of view because this is the only option we have right now.

Jon Krohn: 00:56:41

Right, right, right, right. So the work that you’ve done, so things like your Power of Quantum Neural Networks paper, they are like theoretical machine learning more than-

Amira Abbas: 00:56:54

Yes, exactly.

Jon Krohn: 00:56:55

… empirically. And so what you’re saying with a paper like this is as we begin to scale up quantum computers, orders of magnitude more from where we are today from hundreds of qubits to maybe millions of qubits or something at that kind of scale, then we will be able to solve quantum neural networks. We’ll be able to find optimal parameters with a quantum neural network and these kinds of advantages will emerge then.

Amira Abbas: 00:57:29

Well, I hope so, but we might still encounter some barriers. So for example, going to these large scales where we have billions of parameters is essential for quantum machine learning to be able to compete with large language models and things like this so we have to be able to scale our quantum machine learning models up. But we’re going to have to be able to train them as efficiently as we can train neural networks. This is where this idea of backpropagation comes in. Can we replicate backpropagation? Which I’m sure most people listening here know that backpropagation is a recipe to compute gradients in a very efficient way of these super large neural networks. And this gradient information allows us to go back to our neural network and change our parameters so we get a better function, we get a better fit to our data.

00:58:21

But on a quantum computer backpropagation is not so easy because how do we kind of reuse information to compute gradients efficiently? We can’t really peek into our circuit at different points and extract information because as soon as we do our information collapses, we lose it. So we’re going to encounter some barriers when we scale up our quantum models. We need to figure out a better way to optimize them and train them and backpropagation scaling or resources that replicate backpropagation is really hard to do. So something we showed recently is that backpropagation scaling is pretty much impossible to achieve unless you have something called quantum memory available to you. And this is also something maybe if you’re interested, we can dive a little bit more into. But all I want to say is that there are some actual barriers there that even if we have huge quantum computers, it’s not so straightforward that we’ll be able to optimize them in the ways we can optimize neural networks.
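
One way to see the cost problem is to count circuit runs. The sketch below uses the parameter-shift rule, a known technique for gradients of rotation-gate circuits that is not named in the episode and is brought in here only as an illustration. The `CountingCircuit` class is a hypothetical stand-in for a device; it computes the exact expectation value, whereas real hardware would need many repeated measurements for each run.

```python
import math


class CountingCircuit:
    """Toy stand-in for a quantum device that counts how often it is run."""

    def __init__(self):
        self.runs = 0

    def expectation_z(self, thetas):
        # RY rotations on one qubit compose by adding angles, so the
        # expectation of Z after RY(t1)...RY(tP)|0> is cos(t1 + ... + tP).
        self.runs += 1
        return math.cos(sum(thetas))


def parameter_shift_gradient(circuit, thetas):
    """Estimate every partial derivative with two shifted runs per parameter."""
    grads = []
    for i in range(len(thetas)):
        plus = list(thetas)
        minus = list(thetas)
        plus[i] += math.pi / 2
        minus[i] -= math.pi / 2
        grads.append(
            (circuit.expectation_z(plus) - circuit.expectation_z(minus)) / 2
        )
    return grads


circuit = CountingCircuit()
thetas = [0.1, 0.4, -0.3, 0.7]  # made-up parameter values
grads = parameter_shift_gradient(circuit, thetas)
print("circuit runs:", circuit.runs)
print("gradient:", [round(g, 4) for g in grads])
```

With P parameters this approach needs 2P circuit runs for one gradient, so the cost grows with the number of parameters. Classical backpropagation obtains the full gradient for roughly the cost of a small constant number of forward passes, because intermediate values can be stored and reused, which is exactly what measurement collapse makes difficult on a quantum computer.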

Jon Krohn: 00:59:32

Nice. I mean, let’s dig into some of these terms. So quantum data, quantum memory. Feel free to pick which one you think is the natural one to define next.

Amira Abbas: 00:59:43

Sure. So I’m glad we get a chance to talk about this because I think this is a rather, well, at least to me, this is a new area and this is super exciting because a lot of new results have come out recently by some researchers at Caltech and Google that show some really positive things in this direction. Okay, so what is quantum data? Well, I mentioned that you can think of a quantum state as a vector that encodes some information for your machine learning tasks. So maybe it encodes some information about, let’s say your data set consists of properties of cats and dogs like their weight and their height and their color and so on and whatever. And you figure out a way to encode this information into a vector.

01:00:35

So you can pretty much list, let’s say the numbers in a vector somehow. And then your first step is to encode this into a quantum state. So there are lots of different ways, but let’s say you apply some operations in your quantum computer that depend on the values of these vectors. So let’s say you’re doing rotations about an angle that depends on the weight of your cat or something like this. It seems very artificial, but this is actually something people do. They encode information like this. But what I mean by this idea of quantum data is that you make no assumptions about how you encode your classical information into a quantum state. You’re just given quantum states. You’re given quantum states by some physical process that you make no assumptions about.

01:01:23

And you might then ask, “Well, where does this come up in machine learning?” And the answer is, I don’t actually know. But I think it’s relevant for a lot of physicists and chemists even, they run a lot of experiments in the lab and what comes out of these experiments are data that they then go and process for various reasons. So you can imagine some sort of physical experimental setup that contains data that comes to you in the form of quantum states. And so this is what I mean by quantum data. Now what I mean by quantum memory is, well, if you have a way to take these quantum states that come to you from your experiments and efficiently transduce them, so efficiently store them in quantum memory, so in memory, right? So you’ve got states coming to you and you can house them. Then you can house them and directly process them on a quantum computer. This is an idea of quantum memory.

01:02:22

This is not something so farfetched. This is something that people are working on. This idea of quantum sensors that basically take these states that come to you and then kind of process them thereafter on a quantum computer. And why am I telling you this? Well, I’m telling you this because these kinds of experimental setups, when you have quantum data given to you that you make no assumptions about and you can use them immediately on a quantum computer, there, there are interesting learning tasks that people have shown you can do. You can perform learning tasks there that you need exponential resources to do otherwise, classically. If you just have no access to quantum memory, then this makes this task quite hard. So this might sound a bit convoluted, but all I’m trying to say is there, there’s exponential separations that exist for certain learning tasks.

01:03:17

So this idea of quantum memory is actually quite powerful and something that I think a lot of people are working towards experimentally and theoretically there are known results for learning tasks that are really interesting. So I can also provide some links to some papers if anybody wants to read about this. These learning tasks are of course right up our alley in machine learning where we’re often trying to learn or approximate something with some goal in mind. So I think this is a really interesting area and how this quantum memory will look, again, I don’t know. I think this is something for experimentalists to comment about and think about. But from a mathematical point of view, this is actually also super beautiful, why we get extra power with having quantum states in memory and being able to process them.

01:04:10

So remember I spoke initially about when we measure a quantum system, we collapse our information to something classical. So we kind of destroy our quantum information. But when we have multiple kind of states in memory, we can design a measurement in a very clever way that it doesn’t entirely destroy our quantum information. So we can measure our system without destroying all our information. If we do that, we can then reuse it, we can reuse our information. So this is the trick to having access to quantum memory is that we can start to do more interesting things. Theoretically we can start to design measurements in the lab such that we don’t destroy our quantum information entirely. And so for machine learning, this is really nice because well, if we can reuse our quantum information, maybe we can track things in between and reuse stuff and create efficiencies and learn things more efficiently. I hope that makes sense.

Jon Krohn: 01:05:14

Nice. That does make sense. And here’s a really dumb question, but does quantum memory somehow resolve the issues that you were mentioning right from the beginning of the episode around when we try to measure qubits, they collapse into a known state. Does quantum memory somehow get around that and allow us to maybe, and you were mentioning how we can’t copy information, but maybe we can’t copy quantum information, but maybe somehow quantum memory allows us to do that to some extent?

Amira Abbas: 01:05:50

This is a really great question and so I should probably say there’s a trade off that exists between how much information you can extract from your system and how much you disturb your quantum state, right? So how much information you destroy. So there’s a trade off there.

Jon Krohn: 01:06:09

Right.

Amira Abbas: 01:06:10

And when you have access to quantum memory for multiple quantum states in memory, you can kind of design things in a way that you can extract more information whilst not destroying too much. So you can kind of extract more than you can with just one state at a time. So this is the intuition, and so it kind of resolves a little bit this idea of being able to reuse information because now you can design measurements in a bit of a clever way. So it’s strictly more powerful than just having one state at a time, but it doesn’t completely resolve it because indeed you still need to measure and you still need to extract and you still need to destroy or disturb your state. So the idea from a research point of view is how do you do this in a very elegant manner that you extract enough information out with the resources you have?

01:07:04

So a lot of people are trying to say, “Well, if I just have a reasonable amount of quantum memory, can I achieve some learning task in an efficient manner? Something that doesn’t require exponential time,” something like this. And it turns out there are some learning tasks for which this is plausible. You can solve things in an efficient amount of time if you have a reasonable amount of quantum memory. And if you don’t have this quantum memory, then you run into costs like exponential time. So this I think is quite cool.

Jon Krohn: 01:07:35

That is super cool. Okay, so this reminds me of an analogy that someone, a teacher, it might’ve been a high school teacher said to me so long ago, which is… Yeah, it must’ve been in a high school physics class or something. That an analogy to think of for why it’s so difficult to measure aspects of systems that are so small is that it would be like, if you had a puddle, a little puddle on a sidewalk and you used a thermometer the size of a skyscraper to measure the temperature of that puddle, you’re going to end up changing the temperature of the puddle with your gigantic thermometer. So the closer you try to get to the puddle, the more you mess up how accurately you’re getting the temperature of that puddle.

Amira Abbas: 01:08:28

Indeed. I think, yes. And I think if people are interested in quantum mechanics, there’s this whole field of open quantum systems, which I think fits very nicely in what you’re describing, right? In reality we don’t have these perfect closed systems that we can just implement in a lab. There’s interactions with the environment, like this puddle thermometer analogy is perfect for that. We actually need something more realistic, and this is the field of open quantum systems that tries to understand if you have interactions with an environment, how do you model these more accurately? There I think things very quickly become quite complicated, but indeed I think it is relevant.

Jon Krohn: 01:09:15

Right, right. I’m really scratching at some vague old memories here, but I think the thing that I was just describing with the thermometer and the puddle was related to the Heisenberg uncertainty principle. Anyway, I don’t know how-

Amira Abbas: 01:09:34

Maybe?

Jon Krohn: 01:09:34

… relevant that is for you.

Amira Abbas: 01:09:38

Maybe, I don’t particularly see. I guess the uncertainty principle is also I think quite straightforward to understand. If you’re trying to zero in on one component of your system, then you kind of lose information about another and vice versa, right? I think you can also relate this a little bit to this information disturbance trade off that I mentioned. If you want to kind of get a lot of information out, you’re going to disturb your state quite a bit and vice versa. If you don’t want to disturb your state, you’re not going to get that much information out. So I guess in some sense it’s similar.
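For reference, the textbook position-momentum form of the uncertainty principle mentioned here can be written as below. The notation is an addition and was not used in the conversation: $\sigma_x$ and $\sigma_p$ are the standard deviations of position and momentum, and $\hbar$ is the reduced Planck constant.

$$
\sigma_x \, \sigma_p \ge \frac{\hbar}{2}
$$

Making $\sigma_x$ small forces $\sigma_p$ to be large, and vice versa, which is the "zero in on one component, lose information about another" trade-off described above.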

Jon Krohn: 01:10:18

So all right, what’s next for you with your research? So I guess actually, what’s a main takeaway from the quantum neural network stuff or other papers that you’ve had that you think would be relevant to the audience? And then what’s exciting for you now? What are you working on now? And what breakthrough do you think might be next?

Amira Abbas: 01:10:43

Sure. So personally, I mean I joined the University of Amsterdam and their quantum computing group there is called QuSoft. I joined them because they’re one of the strongest teams. It was a no brainer. They’re one of the strongest teams from the point of quantum theory and in particular complexity theory. So I mentioned this a bit earlier, it’s the study of the hardness of problems and where can quantum computers be useful in this idea? So quantum complexity theory is something I have now become really interested in and I’m in no way an expert, but I think if we want to be able to say something rigorous even about the types of machine learning problems that we are going to hope to solve or make more efficient with quantum computers, we have to understand things from a theoretical computer science point of view, and this is where this complexity theory comes in.

01:11:35

So I joined this group because they’re quite strong in this area and I would really like to grow my expertise there and then see what we can do potentially for machine learning and so on. But I think there are a lot of open questions and things that researchers can look at right now. So for example, I mentioned this problem of trying to train these parameterized quantum neural networks at scale. We need to find a way to be able to do gradient descent in an efficient manner like we can with neural networks. So there was this recent work that we did on quantum backpropagation, which addresses this question. In there, there are a ton of open questions, like maybe we can find special types of quantum models that train well, that train efficiently, and are useful for quantum machine learning. So we have a couple of ideas in there that, if people are interested, they can just, I think, look at the conclusion section and see, “Okay, these are some areas that we think might be interesting.” So trying to train these models.
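As a rough illustration of why gradient descent on parameterized quantum models is costly, here is a minimal sketch of the standard parameter-shift rule on a single simulated qubit. It is not the quantum backpropagation work mentioned above. The circuit (one RY rotation), the cost (the expectation value of Z), and all function names are assumptions chosen for illustration, and the "quantum" part is simulated classically with NumPy.

```python
import numpy as np

# Pauli-Z observable used as the cost function.
Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def ry(theta):
    """Single-qubit RY rotation matrix."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def expectation_z(theta):
    """<Z> after applying RY(theta) to |0>; analytically this equals cos(theta)."""
    state = ry(theta) @ np.array([1.0, 0.0])
    return float(state @ Z @ state)

def parameter_shift_grad(theta):
    """Gradient of <Z> from two extra circuit evaluations at shifted angles."""
    plus = expectation_z(theta + np.pi / 2)
    minus = expectation_z(theta - np.pi / 2)
    return 0.5 * (plus - minus)

def train(theta=0.3, lr=0.4, steps=50):
    """Plain gradient descent that minimizes <Z> over theta."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta

theta_opt = train()
```

Each parameter needs its own pair of circuit evaluations here, so the cost of one gradient grows with the number of parameters. Classical backpropagation avoids that kind of overhead, which is the scaling gap the discussion above is pointing at.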

01:12:38

I do still think that this kernel support vector machine area is interesting, and that there is something quite useful to be found there: an interesting feature map that is relevant for a particular type of dataset. So I always encourage people to start there, especially if they’re coming from classical machine learning. I think this is the most natural way to think as well in terms of quantum machine learning. So I think this is rather cool. And I’ll make the pitch again for quantum memories. So having access to multiple copies of states at a time and figuring out what it is we can learn with this added ability from a theoretical point of view, I think is understudied and I think there’s a lot to say there.
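To make the kernel idea from this answer concrete, here is a small sketch of a kernel built from a quantum-style feature map, simulated classically with NumPy. The feature map (one RY-encoded qubit per input feature), the function names, and the toy data are all assumptions for illustration. This particular map is easy to simulate classically, so it shows the mechanics only and not any quantum advantage.

```python
import numpy as np

def feature_state(x):
    """Encode each feature x_i as the qubit state cos(x_i/2)|0> + sin(x_i/2)|1>."""
    state = np.array([1.0])
    for xi in x:
        qubit = np.array([np.cos(xi / 2), np.sin(xi / 2)])
        state = np.kron(state, qubit)  # tensor product over qubits
    return state

def quantum_kernel(x, y):
    """Kernel value: squared overlap between the two encoded states."""
    return float(np.abs(feature_state(x) @ feature_state(y)) ** 2)

def gram_matrix(X):
    """Pairwise kernel values for every pair of rows in X."""
    n = len(X)
    K = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            K[i, j] = quantum_kernel(X[i], X[j])
    return K

# Hypothetical toy inputs with two features each.
X = np.array([[0.1, 0.5], [1.2, -0.3], [2.0, 1.0]])
K = gram_matrix(X)
```

A Gram matrix like `K` could then be handed to a classical support vector machine, for example scikit-learn's `SVC` with `kernel="precomputed"`. The research question raised above is which feature maps are both hard to compute classically and well suited to a given dataset.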

01:13:22

So in particular, for example, Robert Huang, Jarrod McLean, and others from Caltech and Google have had a series of papers that show, in a machine learning setting, that there are learning tasks we can solve efficiently with this added ability of quantum memory that we can’t solve efficiently if we don’t have it. So I think this is a really interesting direction and I think it’s probably what I would like to look into a lot in the next year or so.

Jon Krohn: 01:13:51

Very nice. It sounds super exciting. And then I would’ve mentioned this in the intro to your episode, which I don’t think I explained this to you before we started recording. But I script an intro and an outro after we record our conversation and in that intro I talk about your background a bit. And so prior to getting into quantum physics, you worked in finance. So your undergraduate degree is actually in business and actuarial science and you got your CFA, which is by all accounts, quite a challenging thing to do. Then you worked in the investment industry and so what happened that you were like, “You know what?” Because we’ve been talking this whole time and you haven’t been like, “I’m going to use quantum support vector machines to figure out how to do accounting better.” So what happened? Were you aware of quantum computing, quantum machine learning in the background or where did it jump up from that you were like, “This is what I need to spend my life doing?”

Amira Abbas: 01:15:04

This is always quite funny to talk about and I think a lot of people don’t understand the decisions until I explain them. I think growing up I don’t think I really even fully understood what physics was, let alone quantum physics. I think in high school it was my worst subject actually. I didn’t understand anything about it. It was always super intimidating for me. But I liked math, so I thought, “Okay, to get a good job,” my parents also encouraged me to go a little bit into finance and so I did actuarial science as a degree, and the next step was to get a job. And don’t get me wrong, I enjoyed it a lot. I liked working in the investment industry, but I just kept wanting more and particularly more of quantitative fulfillment.

01:15:59

So I always liked equations and things like this and so I started learning a little bit. This is how I found machine learning, literally just on YouTube with podcasts, things like this. I started listening to these things and getting interested and then somehow I eventually went down the rabbit hole of, I think I YouTubed a video about the Schrödinger equation. And this was not on purpose. I was reading up about the Black-Scholes equation, which is used to model financial instruments and the lecturer was a physicist and he related a little bit to the evolution of a particle through space and time and then used the Schrödinger equation and I could follow the math. And then this just was like, “Whoa, this is so cool.”

01:16:44

So I went down this rabbit hole of, “Okay, what’s the Schrödinger equation, what’s physics?” And then I found quantum physics and it was all just very lucky. Right time, right place. I wrote to a ton of professors in South Africa where I live and said, “These are the skills I have. Can I please come study physics with you?” And one of them wrote back, Professor Petruccione, he’s now in Stellenbosch. But he accepted me as a student and I went back to live with my parents and I then just got consumed by this idea of quantum physics. And to be honest, back then there was no real obvious direction. I don’t think there were a lot of jobs in quantum physics. So my family was super concerned, how am I going to make money? How am I going to feed myself? But I think in the end it worked out. I was lucky with timing and I think it’s okay so far. So this is how I went in this direction.

Jon Krohn: 01:17:39

Amazing. That’s such a cool story. I was smiling ear to ear the whole time. It’s so great to be able to find a passion like that, and I get the itching for more. My sister who’s actually at the time of recording, she’s been staying with me here in New York for a couple of days, but she lives in Toronto and she’s worked in finance for many years. She did an undergrad in financial math and has been working as a trader for many years and now is in the ESG space. But she had the similar itch to always be doing the most complex derivatives trading possible because I guess people like you and her have such a desire to be constantly pushing the envelope of your mind and digging into something deeper and building upon the foundations that you have. And I think in finance you often completely, in terms of practical applications of finance, you start to run into limits of how abstract or complex the math can be and still be useful and make money.

Amira Abbas: 01:18:54

Exactly. I think the last part of what you said is important: the things that I wanted to do, I don’t think anyone in finance cares about that. And that was the problem, I think. I was thinking very abstractly and companies of course have purpose and I just wasn’t aligned to this anymore.

Jon Krohn: 01:19:14

Theoretical finance, I don’t know, you don’t hear about that too often these days.

Amira Abbas: 01:19:18

That sounds nice. That sounds nice. But then you’re probably limited to universities, I imagine.

Jon Krohn: 01:19:25

Let’s imagine everyone has all the resources they need to clothe themselves and be secure and have food. It’s a nice theoretical finance idea. So very cool. Let’s jump now to audience questions because we had a lot of interest in you coming on the show. So for our listeners who aren’t aware, for some guests that I have coming up, and I don’t do it for every guest, I don’t have an exact process for this, but I basically think ahead to who am I interviewing next week. And is this somebody that if I’m like, “This is the topic that I’m thinking of covering, or this is their background, is it likely to elicit a lot of questions, a lot of interest?” And in your case, it very much did. So we got several hundred reactions from people on LinkedIn, tens of thousands of impressions, dozens of comments, and so some amazing questions came up from the audience.

01:20:29

Nice, so our first question here is from Annika Nel, and she is actually from Cape Town. So from your part of the globe, Cape Town, and you mentioned Stellenbosch University there recently as being the place where there was a professor that first let you move back in with your parents and do some physics. And so Annika actually, she studied physics and math at Stellenbosch and computer science as well, now works as a backend developer in Cape Town. And Annika is wondering, for people looking to do their post-grad in, it says this field, so I’m assuming quantum machine learning, how does somebody get the right background? I guess they watch one YouTube video on the Schrödinger wave equation and they’re good to go.

Amira Abbas: 01:21:30

Oh no, I think, well that’s the spark and then the rest is a lot of sleepless nights, hard work and eating and breathing quantum physics till things start to click, right? No, so I think for people in South Africa, I can immediately say, okay, well Francesco’s group at the University of Stellenbosch, if you’re interested in quantum machine learning, this is the way to go. But for other people in other countries and other places, it’s not so easy to always figure out who to contact and what to do. So if you’re interested in quantum machine learning, I’d say start by first figuring out what it is you’re interested in within the field, because it’s quite broad. So there’s a beautiful textbook… now I’m again going to give a shout-out to my professors. So there’s a book called Machine Learning with Quantum Computers that was written by Maria Schuld and Francesco Petruccione who were my PhD supervisors.

01:22:30

But this textbook is absolutely beautiful. It covers almost everything there is to cover in quantum machine learning. All the aspects, all the different types of things, all the moving parts. And it’s also super digestible for someone who is not a physicist. So someone like me, for example, I wasn’t a physicist by background, but I could pick up this book and I could kind of make sense of it after a couple of reads. So I’d recommend starting with something like this. And within this textbook there are references for each section and each thing. So if there’s something in there that piques your interest, you can kind of look at the reference and see the paper and see the authors of the papers. And there you can start to gauge, you’ll see immediately some commonalities in who’s writing the certain types of research on the topics that you like, and just reach out to them directly.

01:23:20

I mean, academics in particular are super approachable. It might seem strange to just send somebody a random email if you’re coming from the investment industry or any kind of industry. But in academia, this is totally normal. Just reach out and say, “Hi, I’ve read your work and I have a question about it and I want to study it, or I want to do my post-grad.” And nine times out of 10, if you structure the email appropriately and positively and you show your enthusiasm, you convey that spark of interest, someone will help you. So I think this is a way to go, and this is kind of like what I did. I wrote emails to everybody, even though nobody knew who I was. I was totally random. I had no skills, but I was persistent. So these are the two things, be polite, be persistent, and find research like this.

Jon Krohn: 01:24:09

Positive.

Amira Abbas: 01:24:13

Yeah, and positive.

Jon Krohn: 01:24:13

Polite, persistent, and positive.

Amira Abbas: 01:24:13

Three P’s.

Jon Krohn: 01:24:14